农历新年的第一场日落🌄

2025 / 1 / 28

和MRL来江桥看日落

实际上这个桥几乎是每年寒暑假一定会去的地方了

冬天的时候我们会在冰面上看日落,夏天的时候我们会在桥上看日落

决定复刻一下6年前的视角👇:

2025复刻版👇

拍日落的时候我和mrl说iPhone的成像系统拥有一颗等效77mm的3倍光学镜头,拍日落再合适不过了

然后他掏出了小米14,切到了60倍变焦😭,可以拍到桥上的卡车

输麻了

走着走着,我们来到裸露的河床,这里曾经是江心岛,只是冬天时水已褪去

可以看到很多有趣的小东西,比如这个田螺

河蚌

这只死绿鸟又开始阴阳起来辣😆

上学期简单的学了些RL的皮毛,最近看了一些现代控制和最优控制的文章,对RL和它们的关系有了进一步的理解

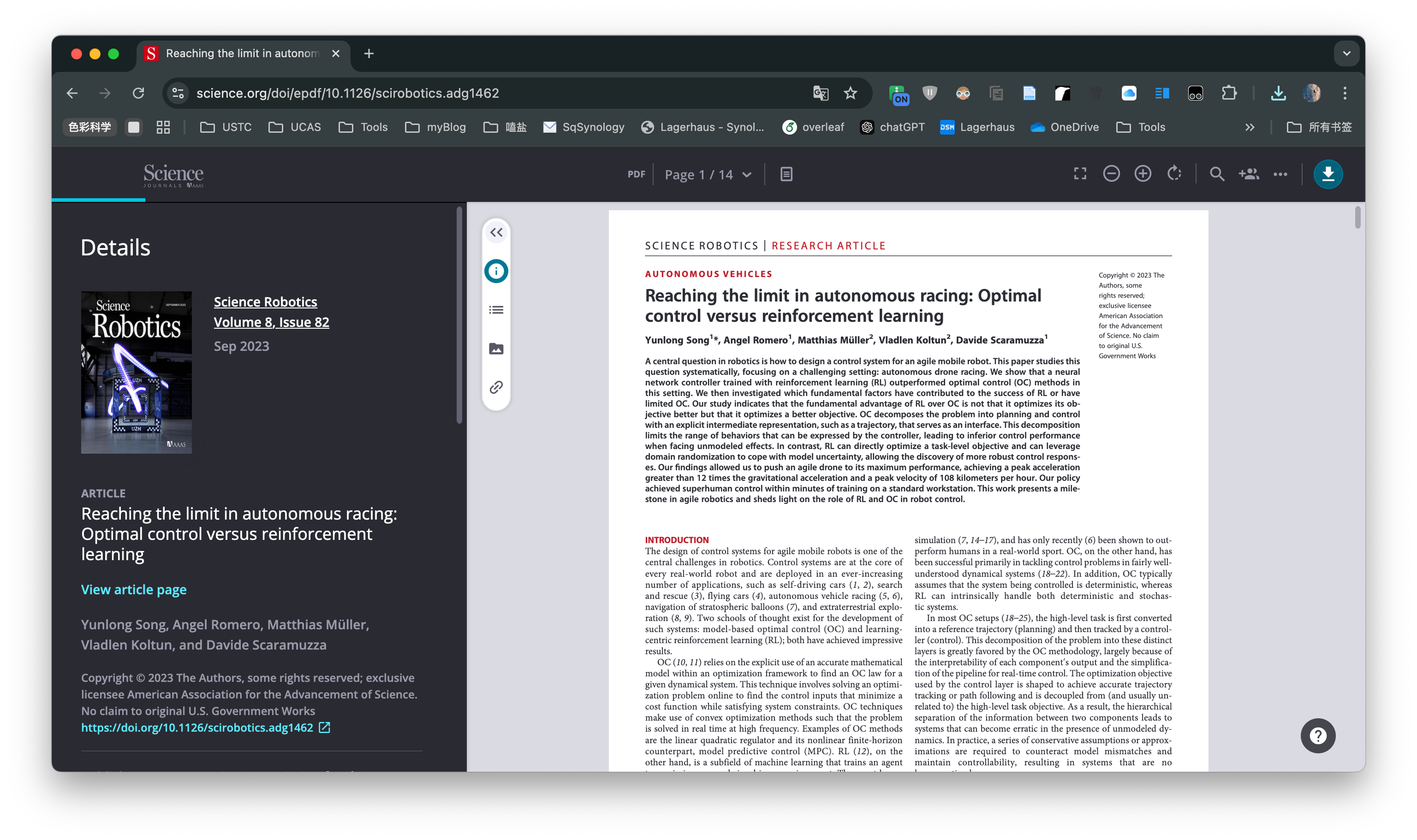

今天发现了一篇UZH发表在Science的神级Paper

虽然没什么开创性理论,但很好的解释了为什么用rl做控制比oc更好,因为能够学习出更好的cost function

RL本质上是在做OC,但其固有特性使得它能够轻松融入额外的机制,例如domain randomization或teacher-student training,这便已经跳出传统OC的框架,而且RL在四足和无人机上已经展现出远超MPC的性能

正如这篇paper在Abstract中所提到的:

We show that a neural network controller trained with reinforcement learning (RL) outperforms optimal control (OC) methods in this setting. We then investigate which fundamental factors have contributed to the success of RL or have limited OC. Our study indicates that the fundamental advantage of RL over OC is not that it optimizes its objective better but that it optimizes a better objective. OC decomposes the problem into planning and control with an explicit intermediate representation, such as a trajectory, that serves as an interface. This decomposition limits the range of behaviors that can be expressed by the controller, leading to inferior control performance when facing unmodeled effects. In contrast, RL can directly optimize a task-level objective and can leverage domain randomization to cope with model uncertainty, allowing the discovery of more robust control responses.

DeepSeek确实牛逼,我也推荐给了好多人,用过的都说好

不过有点担心它们是怎么盈利的

所以准备这几天出一个教程,详细介绍下如何在本地部署DeepSeek🤔